Predicting Housing Prices: Regression Model Comparison

In this project I built two regression models to predict housing prices from a Kaggle dataset and compared their performance. The first was a Ridge Regression model whose hyperparameters I tuned to improve performance. The second was an ensemble model: Bayesian Additive Regression Trees (BART).
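Below is a minimal sketch of how a ridge model can be tuned. It assumes the tuned hyperparameter is the regularization strength `alpha` and that k-fold cross-validation is used to select it. The sketch is plain NumPy on synthetic data, not the Kaggle dataset. The helper names `fit_ridge`, `cv_rmse`, and `tune_alpha` are hypothetical. In practice, scikit-learn's `RidgeCV` or `GridSearchCV` would be the more typical choice.

```python
import numpy as np

def fit_ridge(X, y, alpha):
    """Closed-form ridge regression; the intercept is left unpenalized by centering."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    # Solve (Xc^T Xc + alpha * I) w = Xc^T yc for the coefficients
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
    b = y_mean - x_mean @ w
    return w, b

def cv_rmse(X, y, alpha, n_folds=5, seed=0):
    """Mean validation RMSE over k folds (same fold split for every alpha)."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    errors = []
    for k in range(n_folds):
        val = folds[k]
        train = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        w, b = fit_ridge(X[train], y[train], alpha)
        resid = X[val] @ w + b - y[val]
        errors.append(np.sqrt(np.mean(resid ** 2)))
    return float(np.mean(errors))

def tune_alpha(X, y, alphas):
    """Return the alpha with the lowest cross-validated RMSE, plus all scores."""
    scores = {a: cv_rmse(X, y, a) for a in alphas}
    best = min(scores, key=scores.get)
    return best, scores

# Synthetic stand-in for the housing data (hypothetical, for illustration only)
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 5))
true_w = np.array([3.0, -2.0, 0.5, 0.0, 1.5])
y = X @ true_w + 10.0 + rng.normal(scale=0.5, size=200)

alphas = [0.01, 0.1, 1.0, 10.0, 100.0]
best_alpha, scores = tune_alpha(X, y, alphas)
w, b = fit_ridge(X, y, best_alpha)  # refit on all data with the chosen alpha
print(best_alpha, np.round(w, 2), round(float(b), 2))
```

Because the ridge penalty is sensitive to feature scale, real features measured in very different units would normally be standardized before `alpha` is tuned.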

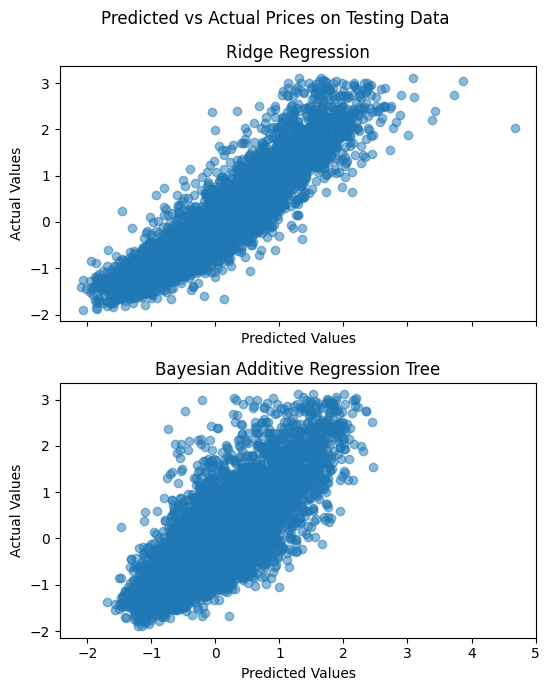

The biggest challenge in this project was learning the PyMC framework well enough to train a BART model. I was curious to see how an ensemble of decision-tree-based "weak learners" would compare against a very simple regularization-based model. I was surprised that ridge regression outperformed BART. However, the scatter plots of predicted vs. actual prices for both models suggest that a slightly more flexible model, such as polynomial regression, could potentially improve on ridge regression.
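To illustrate the suggested next step, here is a hedged sketch. It expands the inputs with degree-2 polynomial terms, keeps the ridge penalty, and compares holdout RMSE against plain linear ridge. The data are synthetic, with a deliberately quadratic target, so the improvement shown here holds by construction and says nothing about the Kaggle results. The helpers `poly2`, `fit_ridge`, and `holdout_rmse` are hypothetical. With scikit-learn, the same idea is usually expressed as a pipeline of `PolynomialFeatures` and `Ridge`.

```python
import numpy as np
from itertools import combinations_with_replacement

def poly2(X):
    """Degree-2 expansion: original columns plus all squares and pairwise products."""
    cols = [X]
    for i, j in combinations_with_replacement(range(X.shape[1]), 2):
        cols.append((X[:, i] * X[:, j])[:, None])
    return np.hstack(cols)

def fit_ridge(X, y, alpha):
    """Closed-form ridge regression with an unpenalized intercept (via centering)."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
    return w, y_mean - x_mean @ w

def holdout_rmse(X, y, alpha, train_frac=0.8, seed=0):
    """Fit on a random training split and report RMSE on the held-out rows."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(y))
    cut = int(train_frac * len(y))
    tr, te = idx[:cut], idx[cut:]
    w, b = fit_ridge(X[tr], y[tr], alpha)
    resid = X[te] @ w + b - y[te]
    return float(np.sqrt(np.mean(resid ** 2)))

# Hypothetical target with a curved (quadratic) component, the kind of pattern
# that shows up as a bend in a predicted-vs-actual scatter plot
rng = np.random.default_rng(1)
X = rng.normal(size=(300, 3))
y = 2 * X[:, 0] - X[:, 1] + 1.5 * X[:, 0] ** 2 + rng.normal(scale=0.3, size=300)

rmse_linear = holdout_rmse(X, y, alpha=1.0)
rmse_poly = holdout_rmse(poly2(X), y, alpha=1.0)
print(f"linear ridge RMSE: {rmse_linear:.3f}, degree-2 ridge RMSE: {rmse_poly:.3f}")
```

The penalty is kept on the expanded model because polynomial expansion multiplies the number of features. Ridge regularization helps keep the extra flexibility from overfitting.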